From "What Broke?" to "Why and How?" – A 101 on OpenTelemetry

This blog post follows on from the talk I gave on March 24 at the Lambda Nantes meetup on the subject of OpenTelemetry. For me, this talk had two goals.

Firstly, to explain why classic logs aren't efficient enough and how, with more modern solutions, you can improve your observability, both in production and during development phases.

The second objective was to share my experience and give my first “conference”.

#The current state of log management and monitoring

In today’s complex distributed systems, log management and monitoring have become more challenging than ever. Traditional approaches often relied on isolated tools and reactive monitoring practices that alerted us to issues only after they had impacted the system. Logs were typically stored as static files or in cloud platforms, reviewed post-mortem and managed in isolated silos. Each application, or even each instance of an application, generates its own logs without any inherent link to the logs from other services. This siloed approach not only leads to significant data duplication but also causes an unnecessary explosion in costs as storage and processing requirements grow.

Moreover, modern applications demand real-time insights. Conventional monitoring setups struggle to keep up with dynamic environments because they offer fragmented visibility that’s not designed to track the end-to-end flow of a request. This leads to a scenario where developers spend significant time hunting through disparate logs and metrics, often without a clear understanding of what exactly went wrong or why.

In this context, the critical question we’re facing is not just “What broke?” but rather “Why and how did it break?” This shift reflects the need for a deeper, more holistic understanding of system behavior. It’s about moving away from surface-level troubleshooting towards a more comprehensive approach that examines the entire lifecycle of a request, correlates events across services, and ultimately uncovers the underlying causes of failures.

By reframing the problem, we acknowledge that troubleshooting in today’s environments is not about quick fixes. It’s about using observability to gain actionable insights that empower teams to proactively address vulnerabilities and improve system resilience.

#Context and historical background, the rise of distributed systems

But before going into more detail, let's try to understand exactly how we arrived at this point.

In 2010, Google published a paper describing Dapper, its internal distributed tracing system. Dapper was developed to give Google's developers more information about the behavior of distributed systems. These systems are made up of thousands of machines and numerous services developed by different teams, often in a variety of programming languages and spread over several data centers, which makes it difficult to understand system behavior and solve performance problems. A concrete example is a web search query, which may involve hundreds of query servers and numerous other subsystems. Because users are sensitive to latency, detecting and correcting performance problems in any subsystem becomes crucial.

The key challenges addressed by Dapper:

- Allowing engineers to track how a single request traverses the various subsystems.

- Collecting trace data across distributed services, to make it quick to identify bottlenecks or service anomalies.

- Keeping manual intervention minimal through transparent instrumentation, which reduces the risk of introducing errors while instrumenting code.

- Keeping overhead low: high-volume systems cannot afford extensive instrumentation overhead, so Dapper implemented trace sampling. Only a fraction of requests (often as low as one in a thousand) was recorded, which was deemed sufficient for debugging and analytics while keeping the performance impact minimal. Moreover, an adaptive sampling mechanism dynamically adjusted the sample rate based on workload, an approach that underscores the practical balance between observability and efficiency.
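The one-in-a-thousand sampling described above can be sketched in a few lines. This is a minimal illustration, not Dapper's actual implementation: the function name `should_sample`, the 64-bit trace ID, and the threshold comparison are all assumptions made for the example. Deciding from the trace ID, rather than from a fresh random draw, means every service that sees the same ID reaches the same decision.

```python
import random

ID_SPACE = 2**64  # assumption: trace IDs are 64-bit unsigned integers


def should_sample(trace_id: int, rate: float = 1 / 1000) -> bool:
    """Keep a trace when its ID falls in the lowest `rate` fraction of the ID space."""
    return (trace_id % ID_SPACE) < int(rate * ID_SPACE)


# Simulate 200,000 requests with random trace IDs and count the sampled ones.
rng = random.Random(0)
kept = sum(should_sample(rng.getrandbits(64)) for _ in range(200_000))
print(f"sampled {kept} of 200000 requests")
```

With a rate of one in a thousand, the count printed should land around 200, which gives a feel for how little data is kept while still covering the traffic.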

Following Dapper, several tools emerged:

- Zipkin (Twitter): Introduced a similar tracing model.

- Jaeger (Uber): Extended the distributed tracing concept.

- OpenCensus (Google): Offered robust telemetry libraries, but its coexistence with OpenTracing fragmented the ecosystem.

- OpenTelemetry (merger of OpenCensus and OpenTracing): The two projects merged in 2019, paving the way for a unified standard.

#Understanding observability beyond logs

The concept of observability has evolved to cover more than traditional logging and monitoring. It now encompasses a set of core principles and signals that provide actionable insights:

- Context: Observability starts with context propagation, ensuring that the journey of every request is visible across different components. This enables the reconstruction of the request lifecycle and precise pinpointing of where a fault may have occurred.

- Sampling: As demonstrated by Dapper’s design, sampling is integral to maintaining low overhead while still capturing the necessary data. Through both runtime and collection-phase sampling, systems can effectively manage data volume without sacrificing the quality of insights.

- Signals: Observability gathers three main types of signals:

  - Traces: Represent a complete picture of a request’s journey, displaying the series of operations across distributed services.

  - Metrics: Quantitative data that provides real-time performance insights.

  - Logs: Context-rich records that offer in-depth detail about events as they occur.

Together, these elements enable an end‑to‑end perspective on system health, supporting both proactive monitoring and reactive diagnostics.
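To make context propagation concrete, here is a minimal sketch of how a trace identifier can travel between services in an HTTP header. It follows the W3C Trace Context `traceparent` layout (version, trace ID, parent span ID, flags), which OpenTelemetry uses by default. The helper names `new_traceparent` and `child_traceparent` are hypothetical, and a real application would rely on the OpenTelemetry propagators rather than hand-rolled string handling.

```python
import secrets


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace: 16-byte trace ID, 8-byte span ID, both hex-encoded."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def child_traceparent(incoming: str) -> str:
    """Continue the caller's trace: same trace ID and flags, fresh span ID."""
    version, trace_id, _parent_span_id, flags = incoming.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


# Service A starts the trace and sends the header along with its request ...
header_a = new_traceparent()
# ... and service B keeps the trace ID but identifies its own span.
header_b = child_traceparent(header_a)
print(header_a)
print(header_b)
```

Because the trace ID is shared and only the span ID changes, a backend can later stitch the work done by both services into one request lifecycle.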

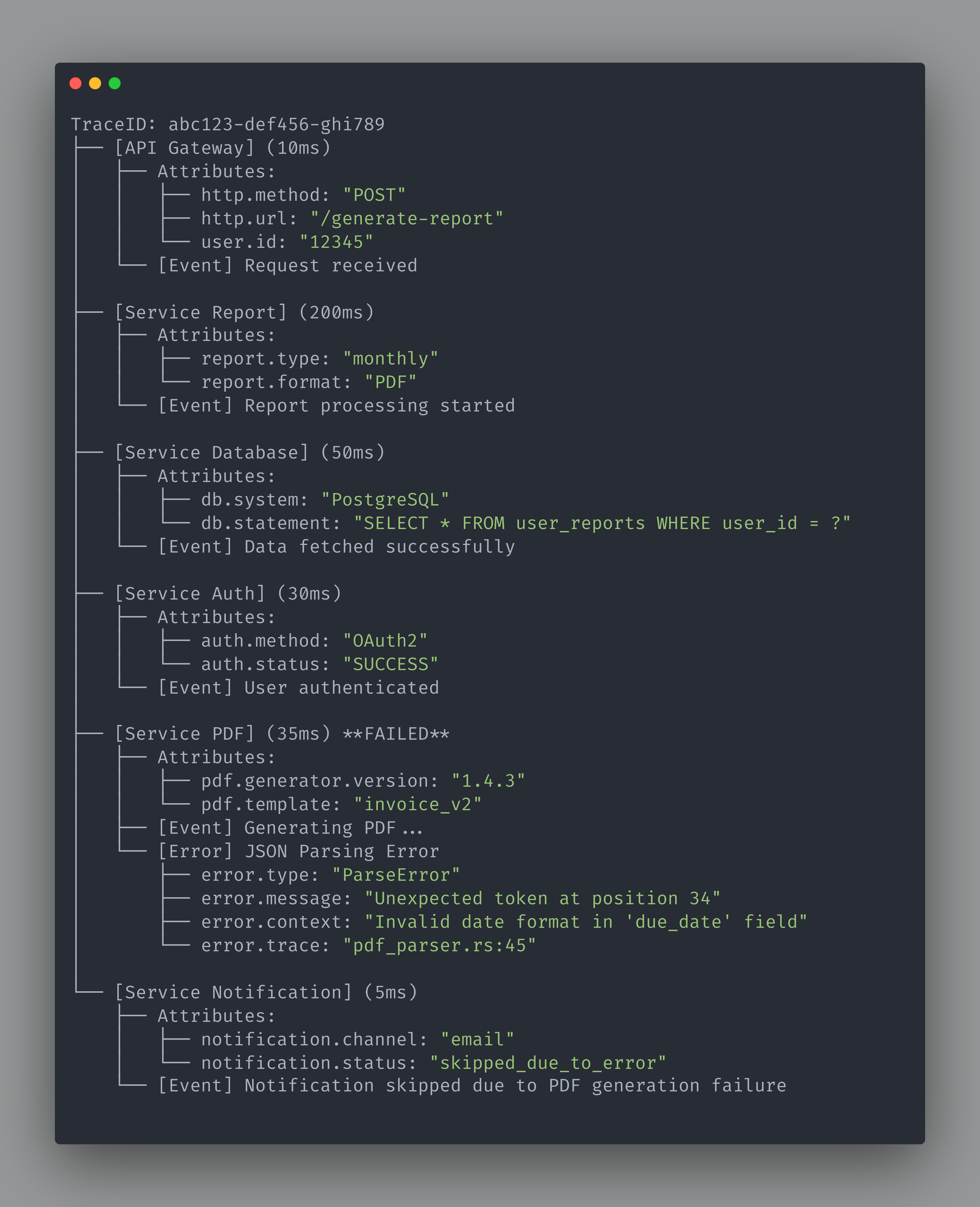

A concrete example (below) is a request to the /generate-report endpoint. Its trace clearly highlights each step, from the start of the request, through PostgreSQL data retrieval and authentication, to a PDF generation failure caused by a JSON parsing issue. This chain of events not only identifies the service at fault but also reveals the contextual performance metrics (e.g., response times) of the surrounding services.
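To illustrate how such a trace points to the culprit, the sketch below models the /generate-report request as a list of spans and picks the deepest span that reports an error. The span names, durations, and error messages are invented for the example, and the `Span` class is a simplified stand-in, not the OpenTelemetry data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]
    name: str
    duration_ms: float
    error: Optional[str] = None


# Illustrative data only: names, timings, and error texts are made up.
TRACE = [
    Span("1", None, "GET /generate-report", 412.0, error="report failed"),
    Span("2", "1", "postgres: load report data", 95.0),
    Span("3", "1", "authenticate", 18.0),
    Span("4", "1", "generate-pdf", 290.0, error="report failed"),
    Span("5", "4", "parse-json", 3.0, error="JSONDecodeError"),
]


def depth(span: Span, by_id: dict) -> int:
    """Number of ancestors between this span and the root span."""
    level = 0
    while span.parent_id is not None:
        span = by_id[span.parent_id]
        level += 1
    return level


def root_cause(spans: list) -> Optional[Span]:
    """Return the deepest failing span, i.e. where the error originated."""
    by_id = {s.span_id: s for s in spans}
    failing = [s for s in spans if s.error]
    return max(failing, key=lambda s: depth(s, by_id)) if failing else None


# Print the trace as an indented tree, then the span where the failure started.
by_id = {s.span_id: s for s in TRACE}
for s in TRACE:
    status = f"  ERROR: {s.error}" if s.error else ""
    print(f"{'  ' * depth(s, by_id)}{s.name} ({s.duration_ms:.0f} ms){status}")
print("root cause:", root_cause(TRACE).name)
```

The error bubbles up to the root span, but the deepest failing span is the one worth reading first; the sibling spans show what the healthy services were doing in the meantime.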

#Addressing the problem: how OpenTelemetry provides an answer

At its core, the challenge in modern distributed systems is no longer detecting that a failure has occurred, but rather understanding precisely why and how it happened. OpenTelemetry addresses the modern challenges of distributed systems by providing unified end-to-end observability. By consolidating traces, metrics, and logs into a single framework, it offers a holistic view of requests traversing multiple services. This integration ensures that every step from inception to failure is documented, enabling teams to monitor system behavior in real time and gain a clear understanding of complex interactions.

During early development, before any production deployment, OpenTelemetry offers a way of understanding the complete execution flow of requests. Developers can output trace data directly to stdout, without the need to configure a full-fledged backend, and immediately observe the individual steps a request takes through the system. This immediate feedback loop is invaluable: it enables engineers to quickly identify flaws, misconfigurations, or bottlenecks in the code. By visually tracking context propagation and span relationships, debugging becomes a faster, iterative process that significantly shortens development cycles.

When deployed in production, OpenTelemetry continues to enhance system reliability by providing end-to-end observability of every request. Detailed traces capture the complete lifecycle, from the entry point of a request to the execution of its final operation, allowing engineers to pinpoint not only the location of a failure but also the sequence of events leading up to an incident. For example, if the PDF generation service from our previous example experiences an unusual delay due to a JSON parsing error, the trace clearly identifies the problematic component and illustrates how delays propagate across related services. This granular insight is instrumental in rapid incident resolution, reducing both downtime and the mean time to recovery.

Beyond reactive troubleshooting, the continuous aggregation of trace data facilitates proactive monitoring of system performance. In production, adaptive sampling strategies ensure that, even under heavy load, collecting representative telemetry data remains efficient and low-overhead.
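As a rough illustration of adaptive sampling, the sketch below lowers the sampling probability as traffic grows, so that the number of recorded traces per second stays near a fixed budget. The function name and the budget of 10 traces per second are assumptions made for the example, not values taken from OpenTelemetry or Dapper.

```python
def adaptive_rate(requests_per_second: float, budget_per_second: float = 10.0) -> float:
    """Sampling probability that keeps about `budget_per_second` traces per second."""
    if requests_per_second <= budget_per_second:
        return 1.0  # low traffic: record everything
    return budget_per_second / requests_per_second


for load in (5, 100, 10_000):
    print(f"{load:>6} req/s -> sample {adaptive_rate(load):.4f} of requests")
```

At low load every request is traced, while at 10,000 requests per second only about one in a thousand is kept, which keeps the telemetry volume roughly constant.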

Integration with real-time monitoring dashboards and alerting systems allows teams to detect performance anomalies or errors before they escalate into major incidents.
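A simple alerting rule of this kind could look like the following sketch, which fires when the 95th-percentile span duration exceeds a threshold. The function names, the 500 ms threshold, and the sample durations are all assumptions for illustration; in practice this logic usually lives in the monitoring backend rather than in application code.

```python
import statistics


def p95(durations_ms: list) -> float:
    """95th percentile of span durations (needs at least two samples)."""
    return statistics.quantiles(durations_ms, n=20)[18]


def should_alert(durations_ms: list, threshold_ms: float = 500.0) -> bool:
    """Fire when the 95th-percentile latency exceeds the threshold."""
    return p95(durations_ms) > threshold_ms


# Made-up samples: a healthy window and one where 15% of requests are slow.
healthy = [120.0] * 95 + [300.0] * 5
degraded = [120.0] * 85 + [900.0] * 15
print(should_alert(healthy), should_alert(degraded))
```

Alerting on a percentile rather than on the mean makes the rule react to a slow tail of requests even when most of the traffic still looks healthy.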

In conclusion, traditional logs are increasingly relegated to basic technical messages, such as confirming that an application has started or noting connection failures with databases or message brokers. These static log entries are insufficient for tracing the full journey of a request through a system. In contrast, comprehensive traces capture the operational detail of each step and provide real-time, contextual insights that are critical for diagnosing complex issues. Consequently, reliance on traditional logs is waning, as true operational insight and proactive troubleshooting now rest on the detailed, dynamic data provided by OpenTelemetry traces.

A special mention to Xavier, who was a huge support and source of motivation before and during the preparation of this talk, and also to Geoffroy and Clément, who provided input during the presentation and helped me feel at ease throughout.